I received the PhD degree in signal and information processing from the Dalian University of Technology (DLUT), Dalian, China, in 2024.

My research interests include 3D computer vision, robotic manipulation, and robotic system engineering.

WeChat: pengwanli2820

Publications

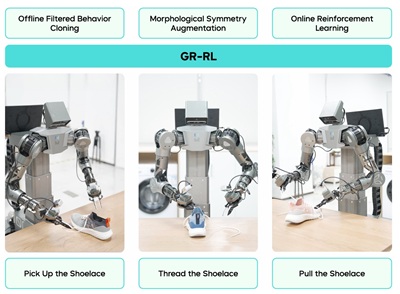

A robotic learning framework that turns a generalist vision-language-action (VLA) policy into a highly capable specialist for long-horizon dexterous manipulation.

Author List: Yunfei Li, Xiao Ma, Jiafeng Xu, Yu Cui, Zhongren Cui, Zhigang Han, Liqun Huang, Tao Kong, Yuxiao Liu, Hao Niu, Wanli Peng, Jingchao Qiao, Zeyu Ren, Haixin Shi, Zhi Su, Jiawen Tian, Yuyang Xiao, Shenyu Zhang, Liwei Zheng, Hang Li, Yonghui Wu

ByteDance Seed Robotics

Webpage • Paper

A Generalizable and Robust Vision-Language-Action (VLA) Model for Long-Horizon and Dexterous Tasks.

Author List: Chilam Cheang, Sijin Chen, Zhongren Cui, Yingdong Hu, Liqun Huang, Tao Kong, Hang Li, Yifeng Li, Yuxiao Liu, Xiao Ma, Hao Niu, Wenxuan Ou, Wanli Peng, Zeyu Ren, Haixin Shi, Jiawen Tian, Hongtao Wu, Xin Xiao, Yuyang Xiao, Jiafeng Xu, Yichu Yang

ByteDance Seed Robotics

Webpage • Paper

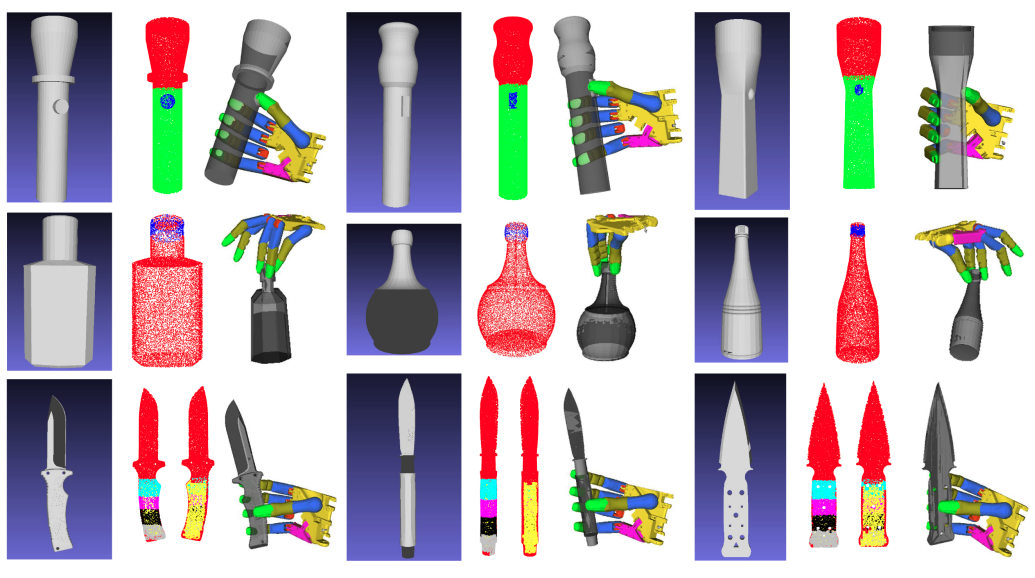

We propose a category-level multi-fingered functional grasp transfer framework.

Rina Wu, Tianqiang Zhu, Wanli Peng, Jinglue Hang, Yi Sun*

RA-L, 2023

Paper

We introduce a semantic representation of functional hand-object interaction that requires no 3D hand pose labels, and design a novel coarse-to-fine grasp generation network to model this interaction.

Yibiao Zhang, Jinglue Hang, Tianqiang Zhu, Xiangbo Lin*, Rina Wu, Wanli Peng, Dongying Tian, Yi Sun

RA-L, 2023

Paper • Code

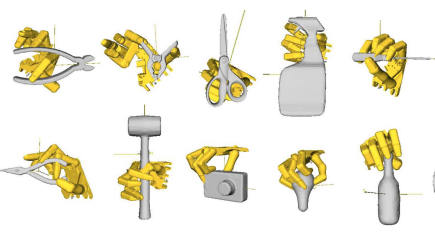

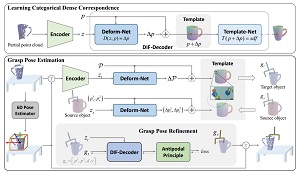

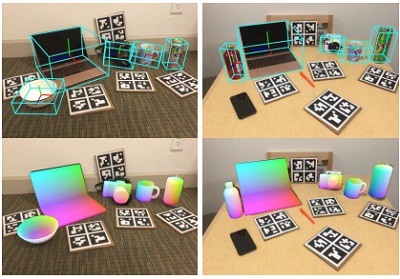

We propose TransGrasp, a category-level grasp pose estimation method that predicts grasp poses of a category of objects by labeling only one object instance.

Hongtao Wen, Jianhang Yan, Wanli Peng*, Yi Sun

ECCV, 2022

Webpage • Paper • Code

SSC-6D, a self-supervised method for category-level 6D pose estimation that predicts the poses of unseen objects without using explicit pose annotations or exact 3D object models of real scenes for training.

Wanli Peng, Jianhang Yan, Hongtao Wen, Yi Sun*

AAAI, 2022

Webpage • Paper • Code